The Subtle Art of Deepfakes: The Rise of Narrative Manipulation in AI

A New Era for Deepfakes

The advent of advanced conversational AI tools such as ChatGPT and Google Gemini has expanded deepfakes beyond high-profile identity swaps. These tools can now subtly alter the story an image tells. By modifying gestures, props, and backgrounds, such edits can deceive both AI detectors and human observers, which raises the stakes for judging authenticity online.

Historical Context: Small Changes, Big Impacts

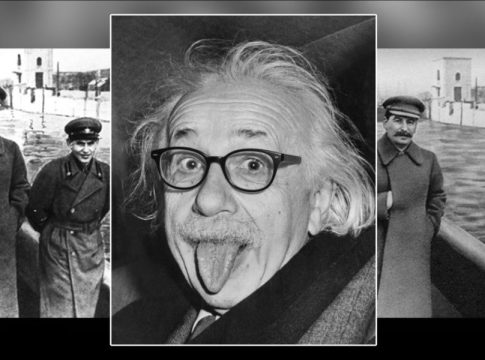

While many associate deepfakes with sensationalized content like non-consensual adult material and political misinformation, history offers a stark reminder that subtle alterations can also distort reality. During Stalin’s regime, photographs were doctored to erase individuals from the historical record, illustrating the dangers of visual obfuscation. This kind of "gaslighting," the quiet alteration of images, can be far more insidious than overt face-swapping.

Introducing MultiFakeVerse

Researchers from Australia are tackling this issue head-on with the MultiFakeVerse dataset, a large collection of image manipulations that shift context, emotion, and narrative without changing a subject’s identity. Drawing inspiration from historical practices, the dataset features over 845,000 images manipulated to subtly influence viewer perceptions, highlighting how finely tuned edits can change the emotional weight or intent of an image.

AI’s Performance Gap

Testing on this new dataset revealed a sobering truth: both deepfake detection technologies and human participants struggle significantly to identify these nuanced manipulations. In a study, humans classified image authenticity accurately only 62% of the time, while leading detection models performed even worse, often missing the alterations altogether.
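To put the 62% figure in perspective, here is a minimal sketch of how accuracy on a real-versus-manipulated task is computed and how far it sits above random guessing. The toy labels are made up for illustration, and the two-way, evenly balanced setup is an assumption; the article does not describe the study's exact protocol.

```python
def accuracy(predictions, labels):
    """Fraction of items whose predicted label matches the true label."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    if not labels:
        raise ValueError("need at least one item")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def margin_over_chance(acc, num_classes=2):
    """How far an accuracy sits above uniform random guessing.

    Assumes balanced classes, which is an illustrative assumption here.
    """
    return acc - 1.0 / num_classes


# Made-up toy data (not from the study): 1 = manipulated, 0 = real.
labels      = [1, 0, 1, 1, 0, 0, 1, 0]
predictions = [1, 0, 0, 1, 0, 1, 0, 0]

toy_accuracy = accuracy(predictions, labels)   # 5 of 8 correct
reported_margin = margin_over_chance(0.62)     # 62% is the figure quoted above
print(f"toy accuracy: {toy_accuracy:.3f}")
print(f"62% sits {reported_margin:.2f} above a coin flip")
```

Under those assumptions, a 62% hit rate is only 12 percentage points better than flipping a coin, which is why the result reads as a near miss rather than reliable detection.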

Delving Deeper: The Mechanics Behind the Edits

The researchers used vision-language models to propose edits that change how subjects in an image are perceived. The adjustments ranged from altering emotions to changing depicted actions, demonstrating that small tweaks can create profound narrative shifts.
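The idea of a model proposing identity-preserving edits can be sketched roughly as follows. This is a hypothetical illustration, not the researchers' pipeline: the edit categories, the prompt wording, the JSON response format, and every function name are assumptions, and the model call is replaced by a canned response so the example runs on its own.

```python
import json
from dataclasses import dataclass

# Hypothetical edit categories, loosely based on the kinds of changes
# the article mentions (emotions, actions, props, backgrounds).
ALLOWED_EDIT_TYPES = ("emotion", "action", "prop", "background")


@dataclass
class EditProposal:
    edit_type: str    # one of ALLOWED_EDIT_TYPES
    target: str       # which part of the image the edit applies to
    instruction: str  # plain-language description of the change


def build_prompt(caption):
    """Assemble a hypothetical prompt for a vision-language model."""
    return (
        f"Image description: {caption}\n"
        "Suggest small edits that change how the subject is perceived "
        "without changing who they are. Reply as a JSON list of objects "
        "with keys edit_type, target, instruction. "
        f"Allowed edit_type values: {', '.join(ALLOWED_EDIT_TYPES)}."
    )


def parse_proposals(raw_json):
    """Keep only proposals whose type is allowed (no identity changes)."""
    proposals = []
    for item in json.loads(raw_json):
        if item.get("edit_type") not in ALLOWED_EDIT_TYPES:
            continue  # e.g. drop anything that would swap the face
        proposals.append(
            EditProposal(item["edit_type"], item["target"], item["instruction"])
        )
    return proposals


# Canned stand-in for a model response (made up for this example).
canned_response = json.dumps([
    {"edit_type": "emotion", "target": "person on the left",
     "instruction": "change the smile to a frown"},
    {"edit_type": "identity", "target": "person on the left",
     "instruction": "replace the face with someone else"},
])

prompt = build_prompt("two people shaking hands at a podium")
proposals = parse_proposals(canned_response)
for p in proposals:
    print(f"[{p.edit_type}] {p.target}: {p.instruction}")
```

In this sketch the filter discards the identity-swapping suggestion and keeps only the emotion edit, mirroring the article's point that these manipulations leave the subject recognizable while changing what the image appears to say.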

Implications for Society and Media

As AI takes on a larger role in generating content, the potential for subtle misinformation grows. This poses a distinct challenge: conventional methods for detecting blatant manipulations may fall short when visual lies are small but cumulative.

The MultiFakeVerse dataset serves as a critical reminder that while flashy deepfakes dominate discussions, these quieter edits could have a longer-lasting impact on public perception and trust in media.

Looking Ahead: A Call for New Detection Tools

The research underscores the need for a paradigm shift in deepfake detection systems. Existing methods, trained primarily on overt manipulations, may not adequately address the deceptive power of narrative edits. As we continue to navigate this increasingly complex digital landscape, developing enhanced detection capabilities will be crucial for ensuring a trustworthy online environment.

The challenge ahead is not just to detect obvious changes but to confront the far subtler manipulations that can shape our understanding of truth in society.

Bio: Priya writes about personal finance, side hustles, gadgets, and tech innovation, and specializes in making complex financial and tech topics easy to digest, with experience in fintech and consumer reviews.