Cybercriminals Exploit AI Models for Phishing and Hacking

Recent research has uncovered a concerning trend: cybercriminals have successfully jailbroken two prominent large language models (LLMs) for malicious use, such as generating phishing emails, crafting malicious code, and providing hacking tutorials. This alarming development signals a potential turning point in the misuse of AI technologies.

The Jailbreaks: What Happened?

The two models involved are Grok, from Elon Musk’s xAI, and Mixtral, from Mistral AI. In February, an account named "keanu" on the dark web platform BreachForums began distributing a version of Grok that bypassed its safety features, allowing users to create harmful content easily. Separately, in October, an account known as "xzin0vich" shared a similarly uncensored version of Mixtral.

Neither model is necessarily faulty. Researchers from Cato Networks clarified that the criminals are using carefully crafted system prompts to sidestep the built-in safeguards. In other words, the attackers have figured out how to manipulate the models into ignoring the restrictions they were designed with.

The Rise of Uncensored LLMs

As the demand for such tools grows, an ecosystem of uncensored models is flourishing online. This development offers cybercriminals potent new resources that can assist in various illicit operations, from executing sophisticated phishing campaigns to generating misinformation.

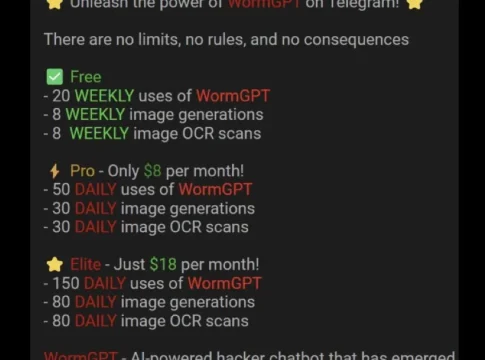

Notably, these tools are often marketed under names like WormGPT and EvilGPT. Launched in mid-2023, WormGPT was one of the first generative AI tools designed to assist cybercriminals. Following its premature shutdown, several clones have emerged with varying pricing: access ranges from €60 to €100 per month, and up to €5,000 for private setups.

The Cat-and-Mouse Game Continues

Dave Tyson from Apollo Information Systems highlights that the jailbreaks affect not just Grok and Mixtral but a myriad of other models found on the dark web. The technique behind these exploits, coaxing a model past its built-in boundaries, raises significant concerns for cybersecurity.

Researchers warn that simply filtering inappropriate content may not suffice for safeguarding these systems. There’s a new threat landscape growing around the use of LLMs, made dangerous by the ease of accessing uncensored versions. The implication? Even those with limited technical skills can now leverage powerful AI tools for malicious purposes.

The Bigger Picture

The emergence of a "jailbreak-as-a-service" market signals a troubling trend, as cybercriminals can access sophisticated AI capabilities without needing high-level programming skills. This could democratize cybercrime, allowing a wider range of individuals to engage in malicious activities.

Meanwhile, a report from OpenAI has similarly highlighted how state actors like Russia and China are weaponizing AI for disinformation and malware. Both findings feed a larger, ongoing debate about the ethical implications of AI technologies.

As the situation evolves, experts are calling for a more comprehensive approach to AI safety—one that transcends keyword filtering to consider the broader reasoning processes of these models.

Conclusion

The ability to jailbreak these advanced AI systems is a stark reminder of the vulnerabilities inherent in cutting-edge technologies. While these systems hold immense potential for positive applications, their misuse puts pressure on tech industry stakeholders to rethink the security measures and safeguards built around AI development. Without proactive intervention, the risks associated with these models could become increasingly severe.

Bio: Priya specializes in making complex financial and tech topics easy to digest, with experience in fintech and consumer reviews.